Work smart, not hard!

How to use LLMs such as OpenAI's ChatGPT, Google Gemini, or GitHub Copilot to do most of the work for you.

To survive in the rapidly developing fields of science, we highly recommend that you not only learn new things every day but also keep refining the way you work. Assistive tools such as large language models (LLMs), a secretary-like form of artificial intelligence specialized in processing human language, can increase your productivity and reduce redundant work.

Some might argue that using assistive tools is cheating or, worse, that it makes us develop bad habits. But consider our main objective as researchers: we do science for the benefit of humankind. Working smarter and more efficiently brings us to that goal sooner.

“True success is not in the learning, but in its application to the benefit of mankind.” - Prince of Songkla

However, you should be aware that these assistive tools are still under development and make mistakes. Relying solely on their output without human review leads to malpractice in scientific research and breaches of publication ethics such as falsification, overclaiming, plagiarism, and more. This is why many researchers, educational institutions, and publishers have banned or restricted their use, and why AI detectors continue to be used. You therefore need to keep strengthening your own expertise and professionalism. Always treat AI as your assistant, not your substitute.

Using GitHub Copilot for coding assistance

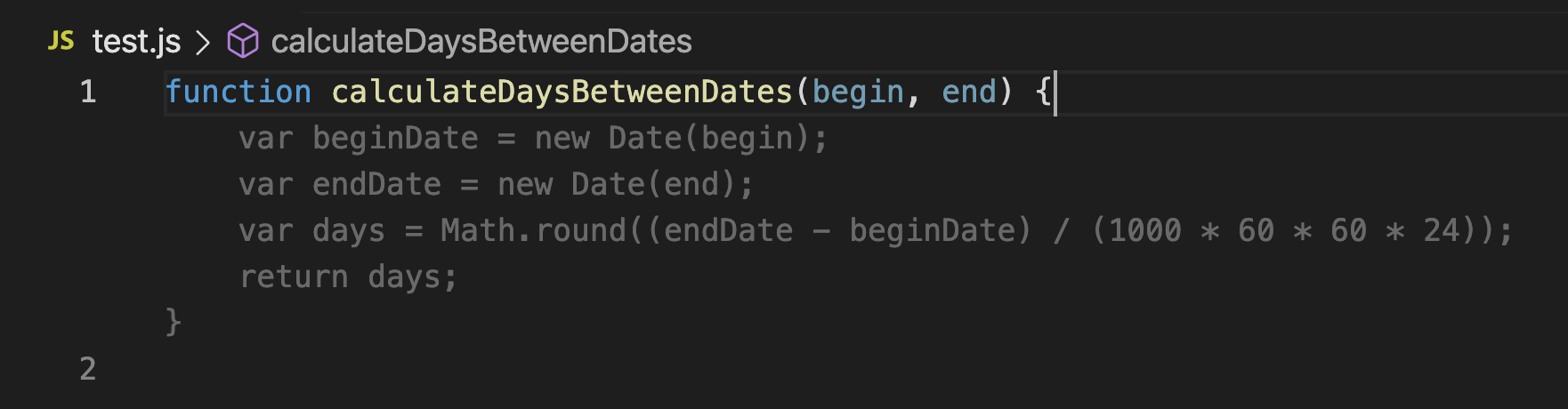

GitHub Copilot is an AI-powered tool that assists you while you code. Its functions include suggesting lines of code and answering programming-related questions through a chat interface. It works in many popular integrated development environments (IDEs) such as Visual Studio Code and the JetBrains IDEs. It may not write a whole program for you from scratch, but it saves you a lot of time on boring, repetitive code.

This service is free for students and teachers with GitHub Education. After verifying your identity with your student ID card and institutional email, you are good to go. For other users, such as professional developers, the service starts from $10 a month.

Visual Studio Code integration

To use GitHub Copilot in Visual Studio Code, you need to install the GitHub Copilot extension. Its chat function will be installed automatically along with it.

You have to log in with a GitHub account that has an active subscription to GitHub Copilot or to GitHub Education.

Once GitHub Copilot is activated, it provides the following features:

Code completion as you write: it suggests code when you define a function with a meaningful name, or even when you write a header comment.

Answering coding questions: through the chat function, it can give you guidance, support, and suggestions for your coding difficulties.

In-editor code explanation and suggestion: inside the editor, you can ask it to explain the selected code, suggest improvements, and so on.

Fix the bug: type the `/fix` command followed by the error or problem you are struggling with, and it will give you solutions based on the context of your prompt.

Initiate a new project: type `@workspace /new`, or `/newNotebook` for a Jupyter notebook, followed by what you intend the new file to do. It can even give you a scaffold to get you started.
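To make the code completion feature more concrete, here is a minimal sketch in Python. The header comment and the descriptive function name are the parts you would type yourself. The function body is hand-written for illustration and only stands in for the kind of suggestion an assistant might offer; it is not actual GitHub Copilot output, and the function name `reverse_complement` is a hypothetical example. The final check shows the human review step that any suggestion should go through.

```python
# Header comment that acts as the prompt for the assistant:
# Return the reverse complement of a DNA sequence (A<->T, C<->G).
def reverse_complement(sequence: str) -> str:
    # Illustrative body: the kind of completion a tool might suggest.
    complement = {"A": "T", "T": "A", "C": "G", "G": "C"}
    # Read the sequence backwards and swap each base for its partner.
    return "".join(complement[base] for base in reversed(sequence.upper()))


# Human review: verify the suggestion on a case you can work out by hand.
assert reverse_complement("ATGC") == "GCAT"
```

A small hand-checked case like this is a cheap way to catch a plausible-looking but wrong suggestion before it reaches your analysis.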

Many more use cases are described in the Visual Studio Code documentation.

Using LLMs for general suggestions and paperwork

What a good time to be a researcher: modern technologies can (though only just) offer suggestions on your writing and even write the first draft for you! The potential of this world-changing technology seems unlimited, and it has been powerful enough to change how most of us work since its first viral release. Such an abrupt change gave us hope, but also raised concerns about reliability, misuse, and overestimation of its performance.

Given these concerns, we recommend that you understand how LLMs really work and how to get the best out of them.

Understanding LLMs

A large language model (LLM) is an artificial intelligence that works by processing the patterns in natural human language.

Originally, the purpose of these models was to predict the next few words so that an incomplete sentence could be completed into a comprehensible paragraph. To do so, researchers trained the AI to learn how words relate to each other from a very large collection of real-world books, articles, and other text. They then realized that these word relationships alone could mimic human knowledge and imagination, so the applications grew well beyond the original task and became valuable enough to be commercialized.

To sum up, an LLM is a model that packs our arts and sciences into mathematical relationships and responds to us with its best prediction based on those relationships. The principle is as simple as that.
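The idea of predicting the next word from learned word relationships can be sketched with a toy example. The code below is a simple word-pair (bigram) counter written for illustration only: real LLMs use large neural networks trained on vastly more text, and the function names and the tiny training sentence here are assumptions made up for this sketch.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def complete(model, start_word, max_new_words=4):
    """Extend `start_word` by repeatedly appending the predicted next word."""
    words = [start_word.lower()]
    for _ in range(max_new_words):
        next_word = predict_next_word(model, words[-1])
        if next_word is None:
            break  # no known continuation for the last word
        words.append(next_word)
    return " ".join(words)


# Tiny made-up training text; a real model learns from billions of words.
corpus = "the cat sat on the mat the cat ate the fish the cat sat on the sofa"
model = train_bigram_model(corpus)
print(complete(model, "the"))
```

Even this toy model only repeats the patterns it has counted: it has no notion of whether its output is true, which is one reason the output of a real LLM still needs human review.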

Get the best out of LLMs with prompt engineering
